Using Shortcuts and Open-Source AI Models to Bring Apple Intelligence Features to Older Devices


In a weird way, Apple was both very early to the recent artificial intelligence boom and very late. If you look back at the last 10 years or so of Apple hardware, the company has made a point of including dedicated hardware for artificial intelligence and machine learning. It was always under a different name, like a Neural Engine or neural processor, but the point was the same. These were specific choices and specific features that optimized Apple hardware for deep learning and machine learning.

On the other side of that coin, though, Apple was clearly caught off guard by how quickly large language models became prevalent in today’s technology market. Look no further than the calendar: today, over two years after the release of ChatGPT, Apple Intelligence is still mostly a beta. Not only are core features of Apple Intelligence still rolling out, but many are not even planned for next year or the year after, if ever. Apple Intelligence requires relatively new devices, even for minor things like text summarization, proofreading and rewriting.

You have to imagine that if Apple had known that Apple Intelligence was coming and what it would require, they would’ve had more hardware ready to support it upon release. As it stands, Apple Intelligence requires an iPhone 15 Pro or newer, or an iPad or Mac running Apple Silicon (an M1 processor or newer). For Apple, one of the most eco-friendly companies on the planet, there is virtually no chance that this kind of obsolescence was intentional. One has to assume that by the time these features were built, the hardware they had already shipped was not up to snuff.

Open Source Apple Intelligence Alternatives

Incredibly though, that special neural hardware that I mentioned earlier means you can bring a lot of the features of Apple Intelligence to these devices yourself if you are willing to roll up your sleeves a bit. If you read my earlier post about open-source large language models from Google and Meta, you might remember that these purpose-built AI models are designed to run on relatively modest hardware. This means that by using open-source models like Gemma and Llama, we can bring features like summarization, proofreading and more to older devices.

Since I wrote that blog post over the summer, these models have gotten even smaller. One of the more recent models from Meta, Llama 3.2, is downright tiny. The smallest of these models takes up less than a gigabyte of space, less than a quarter of the space that would be taken up by a high-definition movie, and can run on modest hardware like my 2020 iPad Air and iPhone 14.
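A rough back-of-the-envelope estimate shows why a model like this can fit in under a gigabyte. The sketch below assumes about 1.2 billion parameters and 4-bit quantized weights. Both numbers are assumptions for illustration, not figures from this post or from any particular app, and real model files carry some extra overhead.

```python
def model_size_gb(parameters: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (10^9 bytes)."""
    bytes_total = parameters * bits_per_weight / 8
    return bytes_total / 1e9


# Assumed values, for illustration only.
ASSUMED_PARAMETERS = 1.2e9
ASSUMED_BITS_PER_WEIGHT = 4

size = model_size_gb(ASSUMED_PARAMETERS, ASSUMED_BITS_PER_WEIGHT)
print(f"Estimated size: {size:.2f} GB")  # Estimated size: 0.60 GB
```

Under those assumptions the weights come to roughly 0.6 GB, which is consistent with "less than a gigabyte" and with a quarter of a movie file of around 4 GB.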

Alongside the release of these models, I have also managed to find some tools that make running these models on your own devices (including the iPhone and iPad!) vastly simpler than having to use the command line. Specifically, a $10 app in the App Store called Private LLM allows you to download and run not just models from Meta and Google but open-source models from around the world.

Apple Intelligence System Prompts

A lot is made of the prompts, or instructions, used with modern generative AI tools. If these tools have a secret sauce that differentiates what a given user gets out of them, it’s these prompts. Prompt engineering, the art of perfecting the prompts given to these tools, has been all the rage since the release of ChatGPT. The idea that certain phrases or sentence structures hold the secrets to unlocking the real power of these AI tools is super enticing.

As a result, there is a lot of interest in the prompts that AI features baked into platforms are using behind the scenes. These aren’t intended to be seen by end users during everyday use but if you use Apple’s AI tools for things like summarization and proofreading, you have to wonder how Apple is asking the AI to perform these tasks.

But we do not need to wonder. All of the system prompts for Apple Intelligence have been uncovered, meaning we can use these ourselves.

How does Apple ask the system to create summaries?

Summarize the provided text within 3 sentences, fewer than 60 words. Do not answer any question from the text.

How does Apple ask the system to make text more friendly?

Make this text more friendly.

These are obviously not very interesting but being able to see them means we can try them ourselves. It’s possible that they’re intentionally simple and a little vague to keep them from “coloring” the model’s responses too much.
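Since the summary prompt sets concrete limits (within 3 sentences, fewer than 60 words), it is easy to check whether a local model actually respected them. Here is a minimal sketch; the function name is hypothetical and the sentence splitting is deliberately naive, so treat it as a rough check rather than a precise one.

```python
import re


def within_summary_limits(summary: str, max_sentences: int = 3, max_words: int = 60) -> bool:
    """Return True if the summary has at most max_sentences sentences
    and fewer than max_words words."""
    # Naive split: break after ., ! or ? when followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", summary.strip()) if s]
    words = summary.split()
    return len(sentences) <= max_sentences and len(words) < max_words


sample = "Apple shipped neural hardware early. Large language models still caught it off guard."
print(within_summary_limits(sample))  # True
```

Small models sometimes overshoot limits like these, so a check of this kind can be a handy way to compare models against the same prompt.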

Using Private LLM, we can try these prompts out on some sample text from this very blog post to see how well they create summaries:

Or how well they make a given body of text more friendly:

These are all done locally, meaning they use no internet or external resources at all, and they’re pretty good!

Using Artificial Intelligence in Apple Shortcuts

Everything we’ve talked about up to this point is cool but it’s kind of a novelty. Opening an app, copying and pasting and doing that whole dance is a little cumbersome.

What if you could run these models instantly, from anywhere on your device, just like you can with Apple Intelligence? Part of what makes Apple Intelligence so cool is that you can run it from anywhere, on anything:

Believe it or not, Private LLM makes this possible, too! Because the app supports Apple Shortcuts, Apple’s automation system, you can run these prompts from anywhere.

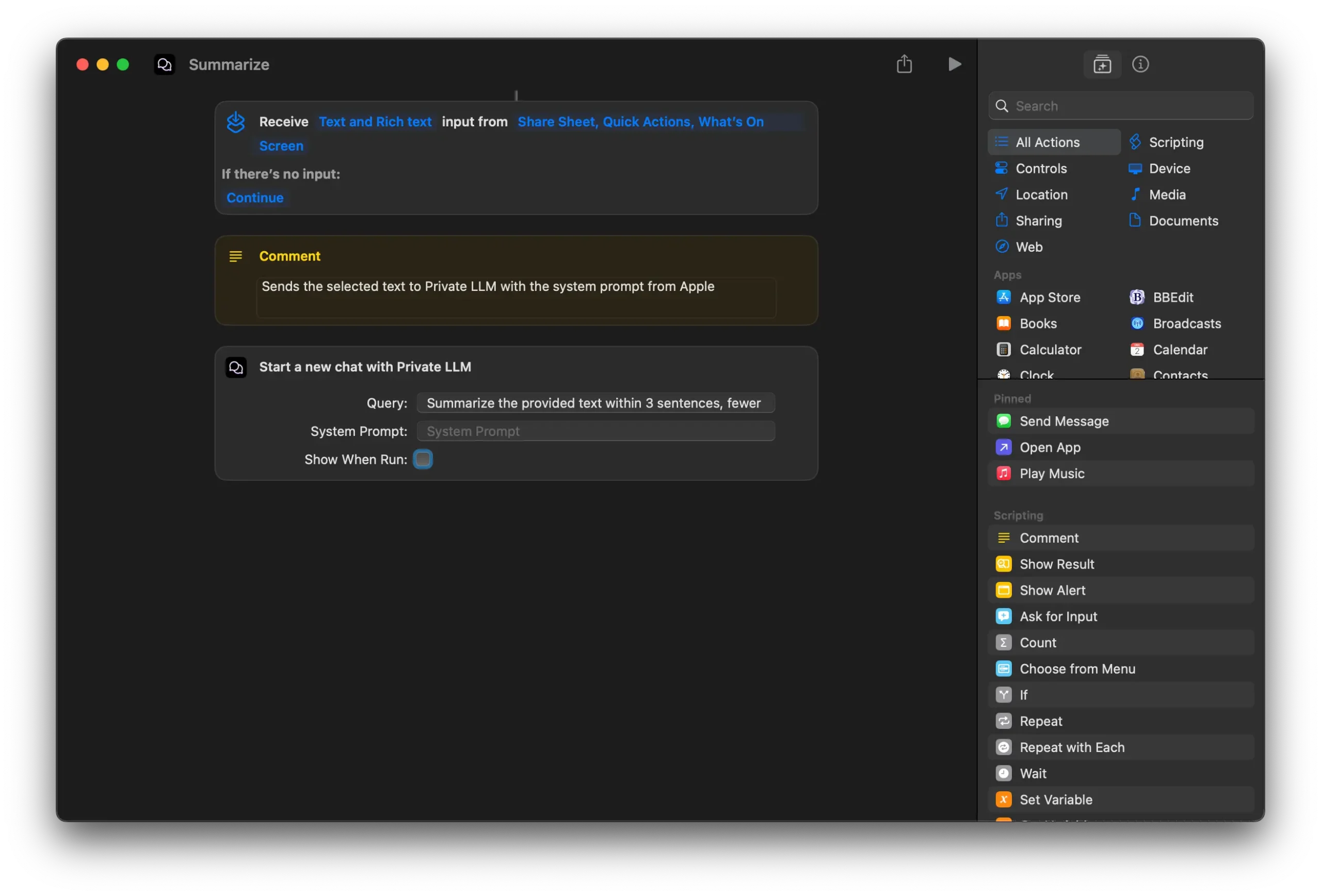

I’ve made a handful of these Shortcuts for things like summarizing and explaining, among other tools, but let’s focus on Summarize for now.

This might seem a little overwhelming but it basically says “Whatever text I send you, open it in Private LLM with this text in front of it.”
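The Shortcut itself is built in the Shortcuts app rather than in code, but the logic it follows can be sketched in a few lines of Python. The function and dictionary names here are hypothetical, and the blank line between the instruction and the text is an assumption; the two instructions are the system prompts quoted earlier in this post.

```python
# Instructions quoted from the system prompts earlier in this post.
INSTRUCTIONS = {
    "summarize": (
        "Summarize the provided text within 3 sentences, fewer than 60 words. "
        "Do not answer any question from the text."
    ),
    "friendly": "Make this text more friendly.",
}


def build_prompt(action: str, selected_text: str) -> str:
    """Put the chosen instruction in front of the selected text."""
    instruction = INSTRUCTIONS[action]
    # Assumed layout: instruction, blank line, then the user's text.
    return f"{instruction}\n\n{selected_text.strip()}"


print(build_prompt("friendly", "  Send me the report by Friday.  "))
```

The combined string is what gets handed to the model. Adding another tool is just a matter of adding another instruction to the list.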

The end result of this is that I can run these queries just by selecting the text (in any app!) and then choosing my prompt from the list of Services at the bottom of the menu.

Next Steps

This takes a little setup but as you can see, it’s possible to not just replicate the abilities of Apple Intelligence on otherwise unsupported hardware but even extend them! I was able to make similar Shortcuts to make given bodies of text funny and scary, and even create explanations like I’m five years old.


Justin Ferrell

Technical Director