Forget ChatGPT: Run Free AI Models from Meta and Google On Your Own Computer

If you’re anything like the Digital Relativity team, you’ve spent the last year or so finding dozens or even hundreds of uses for AI in your everyday life. You might even have your own official AI usage policy like our team does. I even used Perplexity to plan my wife’s F1-themed birthday party!

While AI tools like ChatGPT, Perplexity and Gemini Pro offer powerful capabilities, they come with costs, including subscription fees and a dependence on someone else’s infrastructure. One critical limitation is their reliance on an internet connection: in remote work settings, or in meeting rooms without internet access, these tools are simply unavailable.

Although we interact with these tools on our phones and computers, they run almost exclusively in massive data centers hundreds or thousands of miles away. Without a stable internet connection, they may as well be on the moon, which is a real problem anywhere access is limited or unreliable.

Consider the following examples:

- Working from a Remote Location: In areas with spotty or no internet connectivity, such as mountain cabins or rural towns, tools like ChatGPT can become impractical. Without a connection, your device simply can’t reach the models running in distant data centers.

- Traveling on a Plane: On long-haul flights, in-flight internet is often limited or expensive, which makes tools like Perplexity costly or outright unusable. Travelers may lose access exactly when they need it most, such as for drafting emails or generating ideas on the go.

- Working in a Secure Environment: In certain industries, such as finance or government, strict security protocols may restrict internet access to external websites and cloud-based services. In such environments, using remote AI tools like Gemini Pro may be prohibited due to security concerns.

So what options exist in these situations? How can you take the power of these Large Language Models, or LLMs, with you on the go?

The answer, simply, is to run these models on your own device.

I know what you’re thinking.

“Justin, my laptop is not a data center.”

“Justin, I don’t have an industrial fan to point at my computer to keep it from combusting under the strain of running these tools!”

Friends, we’re going to get an LLM running on your computer today without the need for industrial computing and cooling hardware.

How Can I Run an LLM Locally?

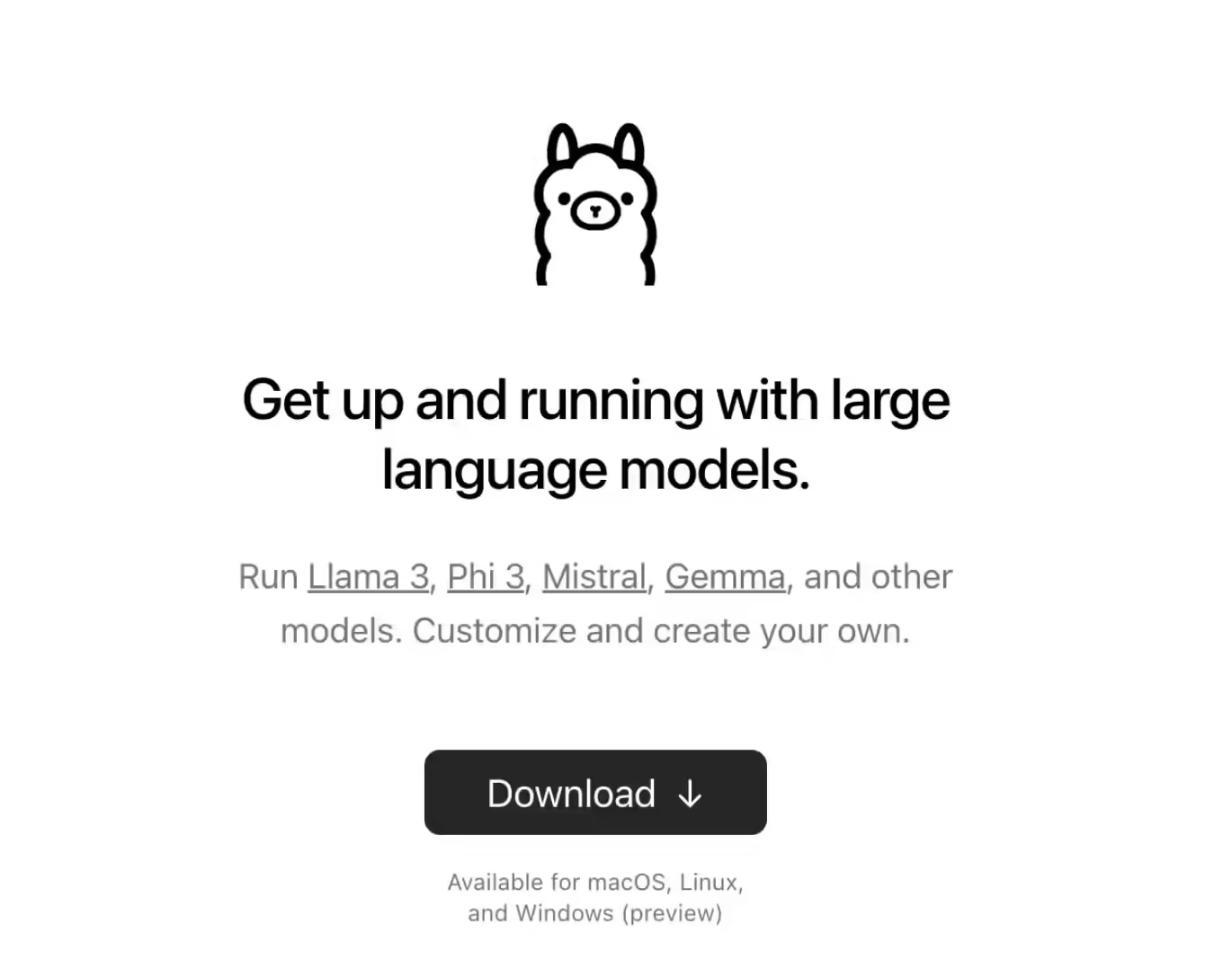

As daunting as the idea of running an AI model on your own computer may seem, it’s honestly not that hard. This is due in large part to an open-source app called Ollama.

Ollama is available for macOS and Linux right now, with a Windows version in preview. It’s easy to install, and once you have it, you’ll have access to its catalog of open-source AI models.

There are a ton of models in there, from companies big and small. Some are “censored” to refuse the kinds of content you would expect, but many others have no such limits and will generate anything you ask for, as long as it’s within their abilities.

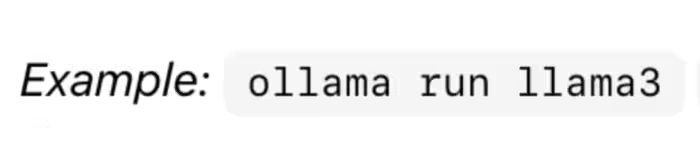

Once you install Ollama, you interact with it through the Terminal. Again, this may seem daunting, but you’re basically just opening it and saying “Run model XYZ with Ollama.”
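For the curious: that “saying” is a command of the form ollama run followed by a model name. If you add a prompt after the model name, Ollama answers it once and exits instead of opening a chat. Here is a minimal Python sketch of scripting that, assuming Ollama is installed and on your PATH; build_command and ask_once are hypothetical helper names chosen for illustration.

```python
import subprocess


def build_command(model: str, prompt: str) -> list[str]:
    """Return the argument list for a one-shot `ollama run` call."""
    # Passing the prompt as an argument makes `ollama run` answer once and
    # exit, instead of opening an interactive chat session.
    return ["ollama", "run", model, prompt]


def ask_once(model: str, prompt: str) -> str:
    """Run a single prompt through a local model and return its reply."""
    # Assumes the `ollama` binary is on PATH. The first call can take a while
    # if the model still has to be downloaded.
    result = subprocess.run(
        build_command(model, prompt),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if ollama exits non-zero
    )
    return result.stdout.strip()


# Example (requires Ollama to be installed):
# print(ask_once("llama3", "Suggest three names for an F1-themed party."))
```

Passing the arguments as a list rather than one shell string means a prompt containing quotes or apostrophes is handed to Ollama intact.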

Which Popular AI Models Can I Run Locally?

For our use case, we’re going to focus on two prominent open models from two of the most reputable organizations in the space.

Llama by Meta

Meta recently released Llama 3, the newest version of the Llama model that powers its Meta.ai chatbot, for free. Incredibly, it is licensed for both research and commercial use, which means you can even build and sell new products on top of Llama without paying Meta, subject to the terms of its community license.

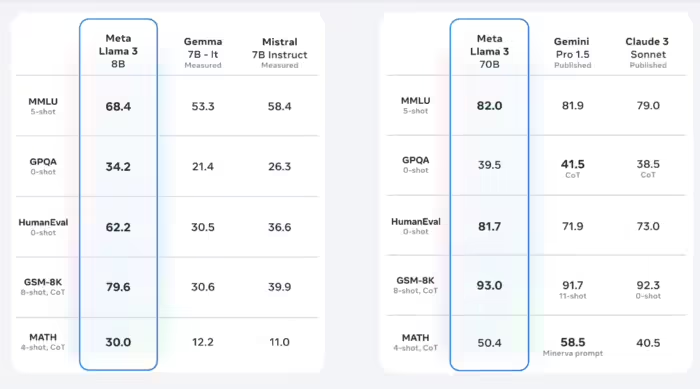

Llama 3 is an incredibly powerful model that beats most other free and open models on standard AI benchmarks. Meta also prioritized safety, working to make the model avoid generating NSFW or harmful content, with the aim of a positive experience for everyone who uses it.

Llama 3 comes in two variants: 8b (8 billion parameters, the learned weights that roughly indicate a model’s size and capability) and 70b. The 8b model requires around 5GB of disk space, and the 70b model requires about 40GB.
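Those disk figures follow from simple arithmetic: parameters times bits per parameter, divided by 8 to get bytes. The sketch below assumes, as is typical for Ollama’s default downloads, that the weights are quantized to about 4 bits each (roughly 4.5 bits once per-block scaling factors are counted), versus the 16 bits per weight of Meta’s original release. Real files differ somewhat because some layers are kept at higher precision; estimated_size_gb is a hypothetical helper.

```python
def estimated_size_gb(parameters: float, bits_per_weight: float = 4.5) -> float:
    """Rough on-disk size in gigabytes (10**9 bytes) of a model's weights."""
    # Each parameter takes bits_per_weight bits; divide by 8 to get bytes.
    total_bytes = parameters * bits_per_weight / 8
    return total_bytes / 1e9


# Assumed round parameter counts for the two Llama 3 variants.
for name, params in [("llama3 (8b)", 8e9), ("llama3:70b", 70e9)]:
    quantized = estimated_size_gb(params)
    full = estimated_size_gb(params, bits_per_weight=16)
    print(f"{name}: ~{quantized:.1f} GB quantized, ~{full:.0f} GB at 16-bit")
```

This prints about 4.5 GB and 39.4 GB for the quantized versions, close to the 5GB and 40GB above, and shows that full 16-bit weights would take roughly three and a half times the space.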

I personally prefer this model because of how well it performs in tests involving code generation.

To install Llama 3, run the default command, which gets you the 8b version, or specify the 70b version:

- ollama run llama3

- ollama run llama3:70b

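The first time you run either command, Ollama downloads the model and then opens a chat session with it in the Terminal. After that, the model is stored on your computer, so the same command starts it right away, with no download and no internet needed.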
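Behind the scenes, Ollama also runs a small local server (by default at http://localhost:11434) that the ollama command itself talks to, with no internet required. As a minimal sketch, assuming that default address, the Python below reads the server’s /api/tags endpoint to check whether a model such as llama3:70b has already been downloaded. The names normalize, installed_models, fetch_tags and is_installed are hypothetical helpers.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default local address


def normalize(name: str) -> str:
    """Add the implicit ':latest' tag, so 'llama3' matches 'llama3:latest'."""
    return name if ":" in name else f"{name}:latest"


def installed_models(tags: dict) -> set[str]:
    """Extract normalized model names from an /api/tags response body."""
    return {normalize(m["name"]) for m in tags.get("models", [])}


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Ask the local Ollama server which models are on disk."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)


def is_installed(model: str, tags: dict) -> bool:
    """True if the model (with or without an explicit tag) is downloaded."""
    return normalize(model) in installed_models(tags)


# Example (requires the Ollama server to be running):
# print(is_installed("llama3:70b", fetch_tags()))
```

Keeping the parsing separate from the network call means the logic can be checked against a saved response without Ollama running.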

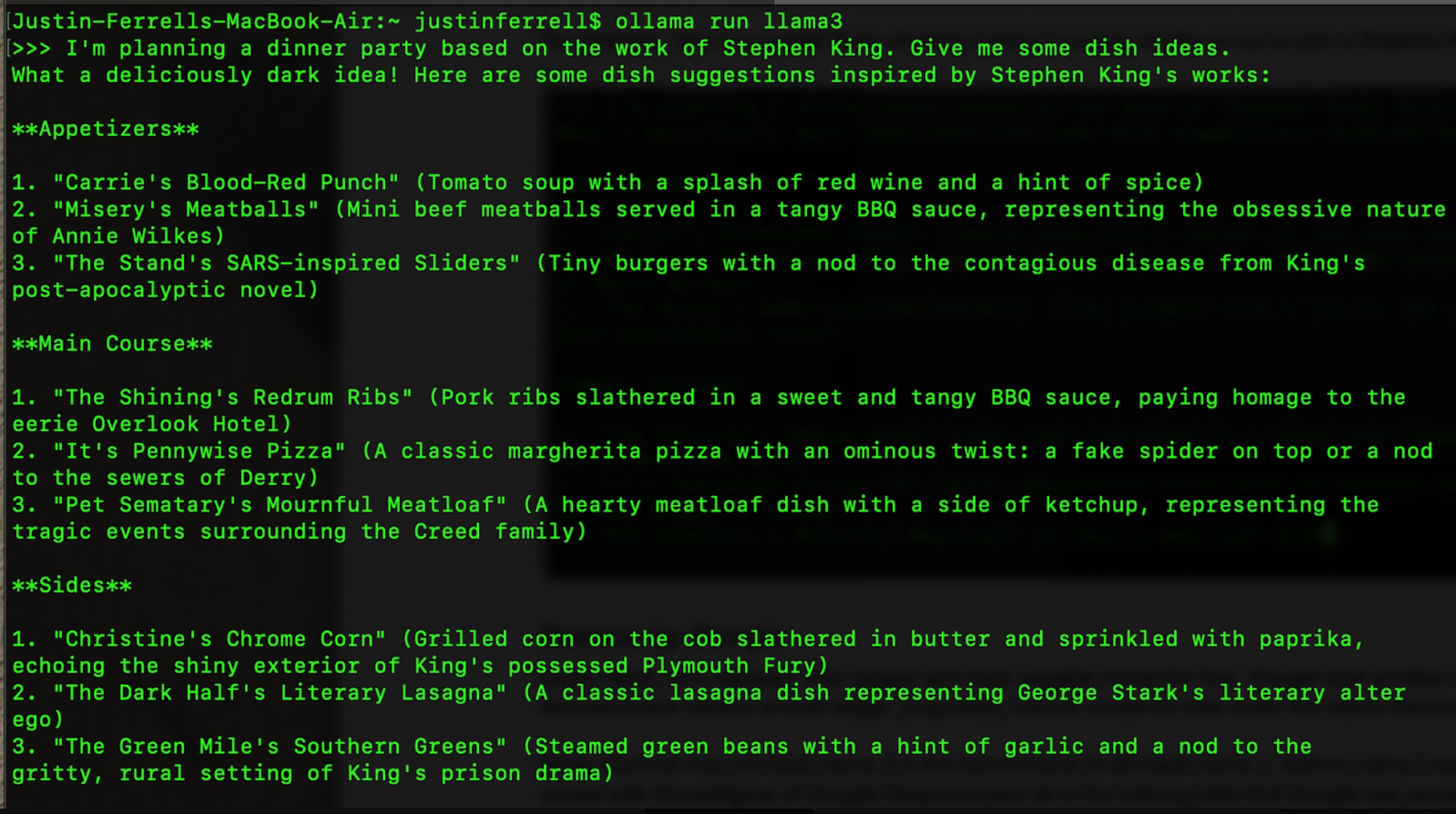

For example, here is a partial demo showing Llama 3 running locally on my computer to plan a Stephen King-themed dinner party, all with no internet:

Gemma by Google

Google took a different approach. Unlike Meta, which released the same Llama 3 model that powers its own chatbot, Google released a lightweight open model called Gemma for free, built from the same research and technology as its larger, proprietary Gemini models.

While Gemma may not beat Llama 3 in the benchmarks (it did beat Llama 2, before Llama 3 was released), it has its own merits. It comes with the pedigree of Google DeepMind and the breadth of Google’s training resources, making it a very capable model in its own right. Like Meta, Google took care to make Gemma not only powerful but safe.

Similar to Llama 3, Gemma comes in two variants, 2b and 7b. The 2b model requires around 2GB of disk space, and the 7b model requires about 5GB.

To install Gemma, specify either the smaller 2b version or the 7b version, which is also what you get by default if you leave off the tag:

- ollama run gemma:2b

- ollama run gemma:7b

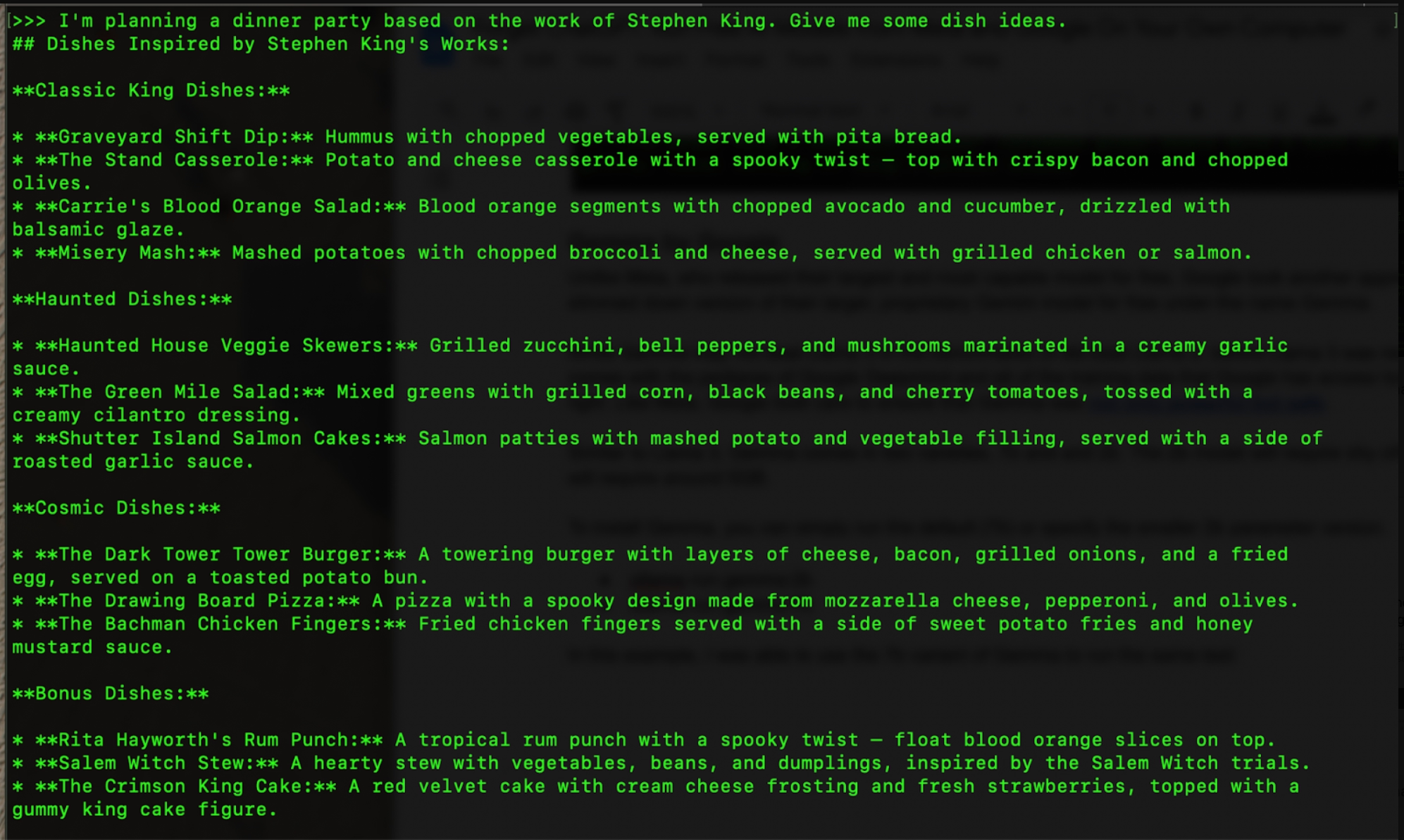

In this example, I was able to use the 7b variant of Gemma to run the same test, still with no internet:

Which Model is Better?

Honestly, this comes down to preference. On paper, Llama 3 outperforms Gemma in the major benchmarks, but there is something to be said for Google’s training data and breadth of knowledge. Both models are capable of most everyday tasks, and their smaller variants run on very modest hardware.

You can also see a difference in personality between the two. In my opinion, Llama 3 takes a more casual and playful approach, while Gemma delivers information in a more straightforward manner. Both demos above ran on an entry-level M2 MacBook Air, which has no fan at all, so these models don’t need any special cooling to run.
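If you want to put a number on how each model performs on your own hardware, Ollama’s local API reports timing details with every reply. Below is a minimal sketch, assuming the default server address and that both models are already downloaded. It sends the same prompt to each model through the /api/generate endpoint and computes generation speed from the eval_count and eval_duration (nanoseconds) fields of the response. The helper names are hypothetical, and speed says nothing about answer quality, which remains a judgment call.

```python
import json
import urllib.request

GENERATE_URL = "http://localhost:11434/api/generate"  # assumed default endpoint


def build_payload(model: str, prompt: str) -> bytes:
    """JSON body for a single, non-streaming generation request."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode()


def tokens_per_second(reply: dict) -> float:
    """Generation speed; eval_duration is reported in nanoseconds."""
    return reply["eval_count"] * 1e9 / reply["eval_duration"]


def generate(model: str, prompt: str) -> dict:
    """Send one prompt to the local server and return the parsed reply."""
    request = urllib.request.Request(
        GENERATE_URL,
        data=build_payload(model, prompt),  # supplying data makes this a POST
        headers={"Content-Type": "application/json"},
    )
    # Generous timeout: the first request also loads the model into memory.
    with urllib.request.urlopen(request, timeout=300) as resp:
        return json.load(resp)


def compare(models: list[str], prompt: str) -> None:
    """Print speed and the start of each model's answer to the same prompt."""
    for model in models:
        reply = generate(model, prompt)
        print(f"{model}: {tokens_per_second(reply):.1f} tokens/s")
        print(reply["response"][:200])
        print()


# Example (requires the Ollama server and both models):
# compare(["llama3", "gemma:7b"], "Plan a Stephen King-themed dinner party.")
```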

How Does This Help Me Use AI More Effectively?

- Cost Savings: Avoid subscription fees and usage limits of commercial AI products.

- Availability: Use AI tools in remote locations or secure environments without internet access.

Running AI models locally empowers you to leverage AI capabilities anytime, anywhere. So, why wait? Start exploring the world of local AI models now!

Justin Ferrell

Technical Director